Read more announcements from Azure at Microsoft Build 2024: New ways Azure helps you build transformational AI experiences and The new era of compute powering Azure AI solutions.

At Microsoft Build 2024, we are excited to add new models to the Phi-3 family of small, open models developed by Microsoft. We are introducing Phi-3-vision, a multimodal model that brings together language and vision capabilities. You can try Phi-3-vision today.

Phi-3-small and Phi-3-medium, announced earlier, are now available on Microsoft Azure, giving developers models for generative AI applications that need strong reasoning under limited compute and in latency-bound scenarios. In addition, Phi-3-mini, which was already available, and Phi-3-medium can now also be used through Azure AI’s models-as-a-service offering, so users can get started quickly and easily.

The Phi-3 family

Phi-3 models are the most capable and cost-effective small language models (SLMs) available, outperforming models of the same size and the next size up across a variety of language, reasoning, coding, and math benchmarks. They are trained using high-quality training data, as explained in Tiny but mighty: The Phi-3 small language models with big potential. The availability of Phi-3 models expands the selection of high-quality models for Azure customers, offering more practical choices as they compose and build generative AI applications.

There are four models in the Phi-3 model family; each model is instruction-tuned and developed in accordance with Microsoft’s responsible AI, safety, and security standards to ensure it’s ready to use off-the-shelf.

- Phi-3-vision is a 4.2B parameter multimodal model with language and vision capabilities.

- Phi-3-mini is a 3.8B parameter language model, available in two context lengths (128K and 4K).

- Phi-3-small is a 7B parameter language model, available in two context lengths (128K and 8K).

- Phi-3-medium is a 14B parameter language model, available in two context lengths (128K and 4K).

Find all Phi-3 models on Azure AI and Hugging Face.

Phi-3 models have been optimized to run across a variety of hardware. Optimized variants are available with ONNX Runtime and DirectML, giving developers support across a wide range of devices and platforms, including mobile and web deployments. Phi-3 models are also available as NVIDIA NIM inference microservices with a standard API interface that can be deployed anywhere, and they have been optimized for inference on NVIDIA GPUs and Intel accelerators.

It’s inspiring to see how developers are using Phi-3 to do incredible things. ITC, an Indian conglomerate, has built a copilot that lets Indian farmers ask questions about their crops in their own vernacular. Khan Academy, which currently uses Azure OpenAI Service to power its Khanmigo for teachers pilot, is experimenting with Phi-3 to improve math tutoring in an affordable, scalable, and adaptable manner. Healthcare software company Epic is also looking to use Phi-3 to summarize complex patient histories more efficiently. Seth Hain, senior vice president of R&D at Epic, explains, “AI is embedded directly into Epic workflows to help solve important issues like clinician burnout, staffing shortages, and organizational financial challenges. Small language models, like Phi-3, have robust yet efficient reasoning capabilities that enable us to offer high-quality generative AI at a lower cost across our applications that help with challenges like summarizing complex patient histories and responding faster to patients.”

Digital Green, used by more than 6 million farmers, is introducing video to their AI assistant, Farmer.Chat, adding to their multimodal conversational interface. “We’re excited about leveraging Phi-3 to increase the efficiency of Farmer.Chat and to enable rural communities to leverage the power of AI to uplift themselves,” said Rikin Gandhi, CEO, Digital Green.

Bringing multimodality to Phi-3

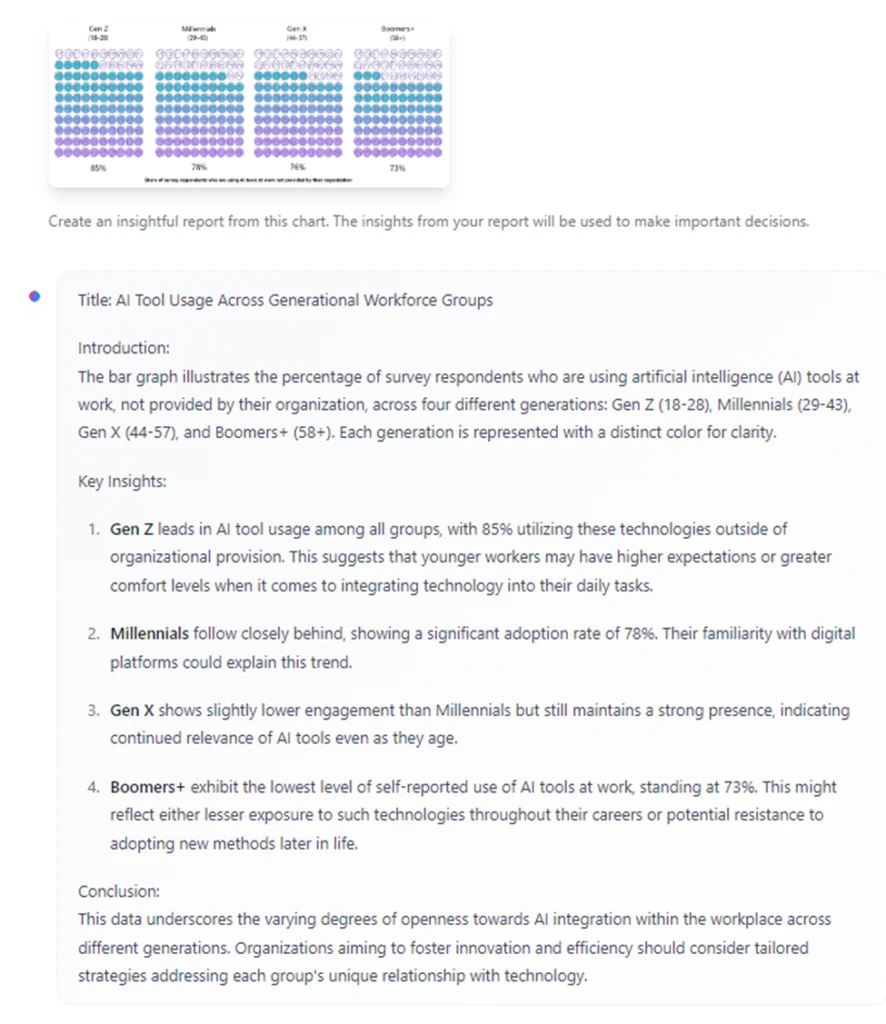

Phi-3-vision is the first multimodal model in the Phi-3 family. It brings together text and images, and it can reason over real-world images as well as extract and reason over text in images. It has also been optimized for chart and diagram understanding and can be used to generate insights and answer questions. Phi-3-vision builds on the language capabilities of Phi-3-mini, continuing to pack strong language and image reasoning quality into a small model.

Phi-3-vision can generate insights from charts and diagrams:

Groundbreaking performance at a small size

As previously shared, Phi-3-small and Phi-3-medium outperform language models of the same size as well as those that are much larger.

- Phi-3-small, with only 7B parameters, beats GPT-3.5T across a variety of language, reasoning, coding, and math benchmarks.¹

- Phi-3-medium, with 14B parameters, continues the trend and outperforms Gemini 1.0 Pro.²

- Phi-3-vision, with just 4.2B parameters, outperforms larger models such as Claude-3 Haiku and Gemini 1.0 Pro V across general visual reasoning, OCR, and table and chart understanding tasks.³

All reported numbers are produced with the same pipeline to ensure that the numbers are comparable. As a result, these numbers may differ from other published numbers due to slight differences in the evaluation methodology. More details on benchmarks are provided in our technical paper.

See detailed benchmarks in the footnotes of this post.

Prioritizing safety

Phi-3 models were developed in accordance with the Microsoft Responsible AI Standard. They underwent rigorous safety measurement and evaluation, red-teaming, and sensitive use review, and they adhere to security guidance, to help ensure that these models are responsibly developed, tested, and deployed in alignment with Microsoft’s standards and best practices.

Phi-3 models are also trained using high-quality data and were further improved with safety post-training, including reinforcement learning from human feedback (RLHF), automated testing and evaluations across dozens of harm categories, and manual red-teaming. Our approach to safety training and evaluations is detailed in our technical paper, and we outline recommended uses and limitations in the model cards.

Finally, developers using the Phi-3 model family can also take advantage of a suite of tools available in Azure AI to help them build safer and more trustworthy applications.

Choosing the right model

With the evolving landscape of available models, customers are increasingly looking to use multiple models in their applications. Choosing the right model depends on the needs of the specific use case and of the business.

Small language models are designed to perform well for simpler tasks, are more accessible and easier to use for organizations with limited resources, and they can be more easily fine-tuned to meet specific needs. They are well suited for applications that need to run locally on a device, where a task doesn’t require extensive reasoning and a quick response is needed.

The choice among Phi-3-mini, Phi-3-small, and Phi-3-medium depends on the complexity of the task and the available computational resources. They can be employed across a variety of language understanding and generation tasks such as content authoring, summarization, question-answering, and sentiment analysis. Beyond traditional language tasks, these models have strong reasoning and logic capabilities, making them good candidates for analytical tasks. The longer context window available across all models enables taking in and reasoning over large text content such as documents, web pages, and code.
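As a rough illustration of how available compute can narrow the choice, the sketch below estimates the memory needed just to hold each model's weights and picks the largest model that fits a budget. The parameter counts and context lengths come from this post; everything else is an assumption made for illustration: 2 bytes per parameter (16-bit weights), an arbitrary 1.2x headroom factor for runtime overhead, and the hypothetical helper names `weights_gb` and `pick_model`. Real memory use depends on quantization, context length, and the serving stack.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Phi3Model:
    name: str
    params_billions: float          # parameter count, from the post
    context_k: Tuple[int, int]      # available context lengths, in K tokens


# Text models only; figures as listed in this post.
PHI3_TEXT_MODELS = (
    Phi3Model("Phi-3-mini", 3.8, (4, 128)),
    Phi3Model("Phi-3-small", 7.0, (8, 128)),
    Phi3Model("Phi-3-medium", 14.0, (4, 128)),
)


def weights_gb(model: Phi3Model, bytes_per_param: float = 2.0) -> float:
    """Approximate size of the weights alone, in GB (1 GB = 1e9 bytes).

    Assumes 16-bit weights by default; ignores KV cache and activations.
    """
    return model.params_billions * bytes_per_param


def pick_model(memory_gb: float, min_context_k: int = 4,
               headroom: float = 1.2) -> Optional[Phi3Model]:
    """Return the largest model that fits the budget, or None.

    `headroom` is an arbitrary illustrative safety factor, not a measured value.
    """
    fitting = [
        m for m in PHI3_TEXT_MODELS
        if weights_gb(m) * headroom <= memory_gb
        and max(m.context_k) >= min_context_k
    ]
    return max(fitting, key=lambda m: m.params_billions, default=None)


if __name__ == "__main__":
    for budget in (8, 16, 24, 40):
        choice = pick_model(budget)
        print(budget, "GB ->", choice.name if choice else "no model fits")
```

Under these assumptions, a budget too small for Phi-3-mini returns `None`, which is the cue to consider a quantized variant or a hosted deployment instead.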

Phi-3-vision is great for tasks that require reasoning over image and text together. It is especially good for OCR tasks including reasoning and Q&A over extracted text, as well as chart, diagram, and table understanding tasks.
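To make "reasoning over image and text together" concrete, here is a minimal sketch of how a combined image-and-text prompt might be assembled. It is not taken from this post: the special tokens (`<|user|>`, `<|image_1|>`, `<|end|>`, `<|assistant|>`) follow the chat format described on the Phi-3-vision model card on Hugging Face, and the helper name `build_vision_prompt` is hypothetical. In practice the model's own processor or chat template should produce this string, so treat the code as an illustration of the layout only.

```python
def build_vision_prompt(question: str, num_images: int = 1) -> str:
    """Build a single-turn prompt that pairs image placeholders with a question.

    Assumes the Phi-3-vision chat layout from the model card: numbered image
    placeholders first, then the user's text, then the assistant turn marker.
    The images themselves are passed to the model separately.
    """
    if num_images < 1:
        raise ValueError("at least one image is required")
    # One numbered placeholder per image, starting at 1.
    placeholders = "".join(f"<|image_{i}|>\n" for i in range(1, num_images + 1))
    return f"<|user|>\n{placeholders}{question}<|end|>\n<|assistant|>\n"


if __name__ == "__main__":
    print(build_vision_prompt("What trend does this chart show?"))
```

The placeholder only marks where the image belongs in the conversation; a chart question and an OCR question differ only in the text that follows it.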

Get started today

To experience Phi-3 for yourself, start by trying the model in the Azure AI Playground. Learn more about building with and customizing Phi-3 for your scenarios using Azure AI Studio.

Footnotes

¹ Table 1: Phi-3-small with only 7B parameters

² Table 2: Phi-3-medium with 14B parameters

³ Table 3: Phi-3-vision with 4.2B parameters

The post New models added to the Phi-3 family, available on Microsoft Azure appeared first on Azure Blog.